AI Vendor Evaluation Guide & Partner Selection Tips

Key Considerations for Small Businesses

Key Takeaways

- A structured AI vendor evaluation process can increase implementation success rates by up to 65% and save organizations from costly missteps.

- Technical capabilities, data security, integration potential, and ethical AI practices should be your primary evaluation criteria when selecting an AI partner.

- Running a limited-scope pilot project with clear success metrics is essential before committing to a full-scale AI implementation.

- Negotiating comprehensive data ownership rights and exit strategies in your AI vendor contracts protects your organization's long-term interests.

- Organizations that establish ongoing performance reviews with their AI vendors see 40% better results than those with "set-it-and-forget-it" approaches.

Choosing the wrong AI vendor can cost your organization millions in failed implementations, wasted resources, and missed opportunities. With the artificial intelligence market growing rapidly, finding the right partner has never been more critical or more challenging. KellSolutions.com has helped hundreds of organizations navigate this complex landscape with its vendor-neutral AI advisory services, giving businesses the confidence to make smart AI investments.

The truth is that 70% of AI projects fail to deliver expected outcomes, with poor vendor selection being a primary cause. This comprehensive guide will walk you through a proven, systematic approach to evaluating and selecting AI vendors that align with your business goals and technical requirements.

Quick Guide: How to Choose the Right AI Vendor

Successful AI implementations start with careful vendor selection based on business alignment, not just technical capabilities. Before diving into detailed evaluations, create a shortlist of vendors that specialize in your specific use case, verify their track record with similar organizations, and assess their financial stability. The strongest AI partnerships come from finding vendors whose values and vision align with yours, not just those with the most impressive technical demos.

Why Most Businesses Pick the Wrong AI Partners

The most common mistake organizations make is starting with technology rather than business objectives. Too often, companies become enamored with cutting-edge AI capabilities without first identifying how these tools will solve specific business problems. Another critical error is underestimating integration complexity—many businesses select AI solutions that perform impressively in isolation but fail to work within existing technology ecosystems. In fact, 63% of AI projects fail due to misalignment with business goals.

Ignoring cultural fit and support quality also leads to partnership failures. The most sophisticated AI solution becomes worthless if your teams can't effectively implement it or if the vendor disappears after the sale. Organizations that prioritize short-term cost savings over long-term value inevitably face higher total costs as implementations struggle or fail entirely.

Finally, the lack of a structured evaluation process creates blind spots in decision-making. Without a comprehensive framework for comparing vendors, organizations often miss critical factors that would have predicted implementation challenges.

5 Essential Steps to Evaluate AI Vendors Like a Pro

A methodical approach to vendor evaluation dramatically increases your chances of successful AI implementation as part of an AI Business Transformation Project. This five-step process has been refined through hundreds of successful AI projects and addresses both technical and business dimensions of vendor selection.

1. Define Clear Business Goals Before Talking to Vendors

Begin with precision about what success looks like for your AI initiative. Specific, measurable objectives create clarity for both your team and potential vendors. Document your current processes, pain points, and desired outcomes in detail. For example, rather than stating "improve customer service," specify "reduce customer resolution time by 30% while maintaining 90% satisfaction ratings." These concrete goals become your evaluation criteria throughout the vendor selection process.

Create a cross-functional team that includes representatives from business units, IT, data science, legal, and executive leadership to ensure comprehensive goal-setting. This diversity of perspectives helps identify potential implementation challenges early and builds organizational buy-in for the eventual solution.

2. Assess Technical Capabilities That Actually Matter

Not all technical features deliver equal business value. Focus your evaluation on capabilities directly tied to your defined goals rather than getting distracted by impressive but ultimately irrelevant functionalities. Require vendors to demonstrate their solutions using your actual data whenever possible, as performance on generic datasets rarely translates perfectly to your specific context.

Look beyond the basic feature list to understand model architecture, training methodologies, and performance metrics relevant to your use case. For critical applications, consider requesting access to technical documentation, API references, and even code samples to allow your technical team to evaluate quality and compatibility.

3. Examine Data Security and Compliance Standards

AI solutions often require access to sensitive business data, making security evaluation non-negotiable. Request detailed information about the vendor's data handling practices, including encryption standards, access controls, and retention policies. Verify compliance with relevant regulations like GDPR, HIPAA, or industry-specific requirements that apply to your business. The best vendors will offer clear documentation about their security certifications and be transparent about how they protect your information.

4. Test Integration with Your Existing Systems

Even the most powerful AI solution becomes worthless if it can't integrate with your existing technology stack. Request detailed information about APIs, connectors, and integration methods the vendor supports. The best vendors offer well-documented APIs, pre-built connectors for common enterprise systems, and dedicated integration support. Before committing, run small-scale integration tests to identify potential compatibility issues with your databases, authentication systems, or enterprise applications.

Pay special attention to data flow requirements, as AI systems often need to access information from multiple sources. Vendors should be able to clearly explain how their solution will connect to your data repositories without creating security vulnerabilities or performance bottlenecks. Consider the total integration effort required, including any custom development your team will need to perform.

5. Review Real Customer Case Studies and References

Look beyond marketing materials to understand how a vendor's solutions perform in real-world environments. Request detailed case studies from organizations similar to yours in size, industry, and use case. The most valuable references will include specific metrics on implementation timeline, ROI achieved, and challenges encountered. Be wary of vendors who can't provide references or whose case studies lack concrete results.

When speaking with references, ask probing questions about support quality, unexpected challenges, and how the vendor responded to problems. The most telling insights often come from discussions about what went wrong and how issues were resolved, not just the final successful outcome.
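One way to keep the comparison across these steps consistent is a weighted scorecard. The sketch below is illustrative only: the criteria names, the weights, and the 1-5 scores for "Vendor A" and "Vendor B" are assumptions made up for the example, not recommended values.

```python
# Hypothetical criteria and weights (assumptions for illustration).
# Weights should sum to 1.0 and reflect your own business priorities.
WEIGHTS = {
    "business_fit": 0.30,
    "technical_capability": 0.20,
    "security_compliance": 0.20,
    "integration": 0.20,
    "references": 0.10,
}


def weighted_score(scores: dict[str, float], weights: dict[str, float] = WEIGHTS) -> float:
    """Return the weighted average of per-criterion scores (for example, 1-5)."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1.0")
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    return sum(weights[name] * scores[name] for name in weights)


# Made-up scores from a hypothetical evaluation team.
vendors = {
    "Vendor A": {"business_fit": 4, "technical_capability": 5,
                 "security_compliance": 3, "integration": 2, "references": 4},
    "Vendor B": {"business_fit": 4, "technical_capability": 3,
                 "security_compliance": 4, "integration": 4, "references": 4},
}

# Rank vendors from highest to lowest weighted score.
ranking = sorted(vendors, key=lambda name: weighted_score(vendors[name]), reverse=True)
for name in ranking:
    print(f"{name}: {weighted_score(vendors[name]):.2f}")
```

In this made-up example the vendor with the stronger demo (Vendor A) ranks below the one that integrates better, which is the kind of trade-off a scorecard makes visible. Agree on the weights before scoring so they are not adjusted to favor a preferred vendor.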

The Technical Evaluation Checklist You Need

Technical evaluation requires looking beyond flashy demos to understand how an AI solution will perform under real-world conditions. This checklist ensures you assess the fundamental capabilities that determine long-term success rather than getting distracted by impressive but ultimately irrelevant features. In an era where 63% of AI projects fail due to misalignment with business goals, selecting the right solution is crucial.

Model Accuracy and Performance Metrics

Request detailed performance metrics specific to your use case, not just generalized accuracy claims. Depending on your application, relevant metrics might include precision, recall, F1 score, mean average precision, or custom business-oriented measurements. Always ask how these metrics were calculated and on what type of data, as performance often varies significantly between test environments and real-world implementation.
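To make vendor-reported numbers comparable, it can help to recompute the standard metrics yourself from raw counts on your own test data. A minimal sketch, assuming a binary classification task; the counts below are invented for illustration.

```python
def classification_metrics(tp: int, fp: int, fn: int) -> dict[str, float]:
    """Compute precision, recall, and F1 from true positives, false positives, false negatives."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = (2 * precision * recall / (precision + recall)) if (precision + recall) else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


# Hypothetical counts from a pilot test set.
metrics = classification_metrics(tp=80, fp=20, fn=40)
print({name: round(value, 3) for name, value in metrics.items()})
```

A model can report high precision while missing a third of the relevant cases, as in this example, so ask for precision and recall together rather than a single accuracy figure.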

The best vendors will be transparent about their models' limitations and edge cases where performance might degrade. Ask specifically about performance stability over time and how the model handles unexpected inputs or edge cases. For critical applications, consider requesting a third-party validation of performance claims rather than relying solely on vendor-provided metrics.

Explainability Features for Business Users

AI solutions that function as "black boxes" create significant business risks, from regulatory compliance issues to user trust problems. Evaluate what explainability features the vendor provides to help non-technical stakeholders understand model decisions. The most useful explainability tools translate complex model operations into business-friendly visualizations and natural language explanations that help users build appropriate trust in the system.

For regulated industries, verify that the explainability features meet relevant transparency requirements and can support audit processes. Even in non-regulated contexts, clear explanations of AI decisions help with user adoption and allow business users to identify potential model errors before they cause significant problems.

Scalability and Infrastructure Requirements

Many AI solutions perform well with limited data volumes but encounter performance or cost issues at scale. Request detailed information about how the vendor's solution scales with increasing data volumes, user counts, and computational complexity. Understand the infrastructure requirements for both development and production environments, including compute resources, storage needs, and specialized hardware like GPUs.

Ask vendors about their largest implementations and typical scaling limitations. The most transparent vendors will provide clear guidance on expected performance at different scales and be honest about where their solution might struggle. For cloud-based offerings, request detailed pricing models that show how costs scale with usage to avoid budget surprises as your implementation grows.
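A quick way to see how costs scale is to model the vendor's pricing tiers and project them at several usage levels. The sketch below assumes a graduated per-call pricing scheme; the tier boundaries and prices are hypothetical and should be replaced with the figures from the vendor's actual price sheet.

```python
# Hypothetical graduated tiers: (upper bound of the tier in API calls, price per call in USD).
TIERS = [
    (100_000, 0.010),
    (1_000_000, 0.006),
    (float("inf"), 0.003),
]


def monthly_cost(calls: int, tiers=TIERS) -> float:
    """Price each slice of usage at the rate of the tier it falls into."""
    cost = 0.0
    lower = 0
    for upper, price in tiers:
        if calls <= lower:
            break
        billable = min(calls, upper) - lower  # calls that fall inside this tier
        cost += billable * price
        lower = upper
    return cost


for volume in (50_000, 500_000, 5_000_000):
    print(f"{volume:>9,} calls -> ${monthly_cost(volume):,.2f}")
```

Running the projection at pilot volume, expected production volume, and a growth scenario gives a concrete basis for the pricing conversation.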

API Documentation and Developer Resources

The quality of developer resources often predicts implementation success more accurately than feature lists. Request access to API documentation, code samples, SDKs, and developer portals to evaluate completeness and clarity. High-quality documentation includes comprehensive endpoint descriptions, clear authentication methods, thorough error handling guidance, and practical examples in multiple programming languages.

Beyond documentation, assess what developer support resources the vendor provides, including technical forums, dedicated support channels, and implementation guidance. The best vendors maintain active developer communities and provide regular updates to their documentation as their solutions evolve.

Customization Options and Limitations

Few AI solutions work perfectly out-of-the-box for complex enterprise use cases. Understand what customization options the vendor provides, from simple configuration settings to model fine-tuning and custom development opportunities. The most flexible solutions offer multiple customization paths, allowing technical teams to modify model behavior without requiring vendor intervention for every change.

Red Flags That Signal a Bad AI Vendor

Identifying problematic vendors early saves tremendous time, money, and organizational frustration. Watch for these warning signs that indicate a vendor might not deliver on their promises or could create significant implementation challenges for your organization.

Vague Answers About Data Usage

Reputable AI vendors provide crystal-clear explanations about how they use your data, particularly regarding training, storage, and privacy. Be immediately suspicious of vendors who give evasive or overly technical responses when asked about data usage rights, security measures, or retention policies. Legitimate concerns include whether your data is used to train models for other customers, how long data is retained after contract termination, and what security measures protect your information during processing.

The most trustworthy vendors provide these details in writing, offer customized data handling agreements for sensitive industries, and are willing to undergo security audits. If a vendor resists providing clear documentation about data practices, consider it a major warning sign regardless of how impressive their technology appears.

Missing or Limited Case Studies

Credible AI vendors have a portfolio of successful implementations they're eager to share. If a vendor can't provide detailed case studies or customer references similar to your use case, it suggests limited real-world experience. Even more concerning is when references are available but only for dramatically different industries or applications than yours. This often indicates the vendor is stretching their expertise beyond proven capabilities.

Be particularly cautious if case studies lack specific metrics or concrete outcomes. Vague success stories without measurable results suggest the vendor may not have delivered meaningful business value. The strongest vendors provide reference customers willing to speak candidly about their experience, not just carefully scripted testimonials.

Poor Documentation and Support

The quality of a vendor's documentation often reflects their overall professionalism and commitment to customer success. Request sample documentation early in the evaluation process and assess its completeness, clarity, and technical accuracy. Missing or outdated documentation signals that implementation will likely require excessive reliance on vendor personnel, creating bottlenecks and dependencies.

Similarly, evaluate support responsiveness during the sales process as it typically represents the best-case scenario. If pre-sales support is slow, disorganized, or unhelpful, post-implementation support will likely be worse. Ask about support tiers, escalation procedures, and guaranteed response times for critical issues. The most reliable vendors offer convenient support options for production systems and have clearly defined processes for handling urgent problems.

Unwillingness to Offer Pilot Programs

Reputable AI vendors understand the importance of proving their value in your specific environment before full-scale deployment. Be skeptical of vendors who resist pilot programs or insist on jumping directly to enterprise-wide implementation. This reluctance often signals lack of confidence in their solution's performance under real-world conditions or concerns about meeting the expectations they've set during sales discussions.

The most trustworthy vendors actively encourage pilot programs with clear success metrics and defined evaluation periods. They view these limited implementations as opportunities to demonstrate value and refine their approach for your specific needs. If a vendor attempts to bypass this critical validation step, consider it a significant warning sign regardless of other promises.

How to Run an Effective AI Pilot Project

Pilot projects provide crucial real-world validation of an AI vendor's capabilities within your specific environment. A well-designed pilot creates a low-risk opportunity to assess both the technology and the vendor relationship before making significant investments. Follow these best practices to maximize the value of your AI pilot programs.

Setting Clear Success Metrics

Define specific, measurable criteria that will determine pilot success before the project begins. These metrics should directly connect to your business objectives rather than technical specifications alone. For example, a customer service AI might be evaluated on accuracy of response, resolution time reduction, customer satisfaction scores, and agent productivity improvements. Document these criteria in writing with the vendor to ensure shared understanding of what constitutes success.

Include both quantitative and qualitative metrics in your evaluation framework. While data-driven measurements provide objective assessment, user feedback about experience and workflow integration often reveals critical issues that numbers alone might miss. The best success frameworks balance performance metrics with adoption indicators and business impact measurements.
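Writing the quantitative criteria down as data makes the pass/fail decision mechanical once the pilot ends. The sketch below reuses the earlier example goal (cut resolution time by 30% while keeping satisfaction at 90%); the baseline of 20 minutes and the observed values are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Criterion:
    """One measurable success criterion agreed with the vendor before the pilot."""
    name: str
    target: float
    higher_is_better: bool = True

    def met(self, observed: float) -> bool:
        if self.higher_is_better:
            return observed >= self.target
        return observed <= self.target


BASELINE_RESOLUTION_MIN = 20.0  # hypothetical pre-pilot average resolution time

criteria = [
    # A 30% reduction means the observed time must be at most 70% of the baseline.
    Criterion("resolution_time_min", target=BASELINE_RESOLUTION_MIN * 0.70, higher_is_better=False),
    Criterion("satisfaction_pct", target=90.0),
]

# Hypothetical measurements collected during the pilot.
observed = {"resolution_time_min": 13.5, "satisfaction_pct": 88.0}

results = {c.name: c.met(observed[c.name]) for c in criteria}
pilot_passed = all(results.values())
print(results, "passed" if pilot_passed else "not passed")
```

Here the pilot hits the speed target but misses the satisfaction target, so it does not pass as defined. Qualitative feedback still needs a separate review; this check only covers the numeric criteria.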

Limiting Scope and Timeframe

Effective pilots have clearly defined boundaries to prevent scope creep and enable timely decision-making. Select a specific business process or department where the AI solution can demonstrate meaningful impact without requiring extensive integration. The ideal scope is narrow enough to implement quickly but substantial enough to validate real business value.

Establish a firm timeline that includes implementation, testing, and evaluation phases. Most successful AI pilots run between 4 and 12 weeks, depending on complexity. Longer pilots risk losing momentum and stakeholder interest, while shorter ones may not provide sufficient data for confident decision-making. Create a detailed project plan with specific milestones and check-in points to maintain focus throughout the pilot period.

Involving the Right Stakeholders

Include representatives from all groups affected by or involved with the AI solution. This typically means bringing together business users, IT staff, data scientists, and executive sponsors. Each stakeholder group brings different perspectives that contribute to comprehensive evaluation. Business users provide practical feedback on usability and workflow integration, while technical teams assess implementation requirements and system compatibility.

Designate clear roles and responsibilities for the pilot, including a dedicated project manager who coordinates between stakeholders and the vendor. Establish regular communication channels for sharing progress updates and addressing issues quickly. The most successful pilots create opportunities for direct interaction between end-users and the vendor's technical team to streamline problem-solving and feature refinement.

Critical Contract Terms to Negotiate

The vendor contract establishes the foundation for your AI partnership and deserves careful attention during negotiations. These key terms have significant long-term implications for your organization's flexibility, costs, and risk exposure.

Data Ownership and Usage Rights

Clearly establish ownership of all data provided to the vendor and any insights generated through their AI systems. Your contract should explicitly state that your organization maintains complete ownership of input data, while also addressing rights to the outputs and insights created. Watch for clauses that grant vendors broad rights to use your data for model training or product development beyond your specific implementation.

The strongest agreements include detailed data handling provisions that specify permitted uses, security requirements, and confidentiality obligations. For sensitive industries, consider including audit rights that allow you to verify the vendor's compliance with these data provisions. Never accept vague language about data ownership or usage rights that could be interpreted to the vendor's advantage.

Model Training and Intellectual Property

Determine who owns the intellectual property rights for AI models trained using your data or customized for your specific needs. Standard vendor agreements often claim ownership of all models and algorithms, but this may not serve your interests if you've invested significant resources in customization. For strategically important implementations, negotiate for at least joint ownership of custom models or exclusive licensing arrangements that prevent your competitors from benefiting from your investment.

Similarly, clarify ownership of any custom features, integrations, or workflows developed during implementation. The most balanced agreements recognize the vendor's ownership of core technology while granting your organization rights to customizations you've funded. If the vendor will be creating custom code for your implementation, ensure your contract includes source code escrow provisions that protect your access in case the vendor goes out of business.

Service Level Agreements (SLAs)

Detailed SLAs establish clear performance expectations and accountability mechanisms for your AI solution. Focus on metrics that impact business operations rather than just technical specifications. Critical SLA components include system availability (uptime), response times, issue resolution timeframes, and model performance guarantees. The agreement should define different severity levels for issues and corresponding response commitments.

Ensure the SLA includes meaningful consequences for missed performance targets, typically in the form of service credits or fee reductions. The most effective SLAs include escalating penalties for repeated or prolonged failures rather than token credits that don't motivate vendor performance. Regularly scheduled SLA reviews should be built into the contract to allow for adjustments as your business needs evolve.
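To see what an uptime commitment and an escalating credit schedule mean in practice, the arithmetic can be sketched as follows. The availability thresholds and credit percentages are hypothetical; real values come from the negotiated contract.

```python
def availability_pct(total_minutes: int, downtime_minutes: float) -> float:
    """Availability as a percentage of the measurement period."""
    return 100.0 * (total_minutes - downtime_minutes) / total_minutes


# Hypothetical escalating schedule: (minimum availability %, credit as % of monthly fee).
# Entries are ordered from best to worst availability.
CREDIT_SCHEDULE = [
    (99.9, 0),
    (99.5, 10),
    (99.0, 25),
    (0.0, 50),
]


def service_credit_pct(availability: float) -> int:
    """Return the credit owed for the first band the availability falls into."""
    for floor, credit in CREDIT_SCHEDULE:
        if availability >= floor:
            return credit
    return CREDIT_SCHEDULE[-1][1]


MINUTES_IN_30_DAYS = 30 * 24 * 60  # 43,200 minutes

for downtime in (20, 120, 500):
    pct = availability_pct(MINUTES_IN_30_DAYS, downtime)
    print(f"{downtime:>3} min down -> {pct:.3f}% available, credit {service_credit_pct(pct)}%")
```

Note how little downtime a 99.9% target allows: roughly 43 minutes in a 30-day month. That is worth checking against the vendor's planned maintenance windows.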

Exit Strategy and Data Portability

Even the most promising vendor relationships eventually end, making exit provisions critical for protecting your long-term flexibility. Your contract should outline a clear process for termination, including data extraction, transition assistance, and knowledge transfer. Specify the format in which your data will be returned and ensure it includes not just raw data but also metadata, model configurations, and performance histories needed to migrate to a new solution.

Building a Long-Term AI Partnership

Case Study: Global Financial Services Firm

A leading financial institution established quarterly innovation workshops with their AI vendor, resulting in three major capabilities not in the original roadmap. This collaborative approach delivered $4.2M in additional value over two years while reducing implementation time for new features by 40% compared to their previous vendor relationship model.

The most successful AI implementations evolve from vendor-customer transactions into genuine strategic partnerships. Organizations that invest in relationship development rather than treating vendors as interchangeable suppliers consistently report higher satisfaction and better business outcomes from their AI initiatives.

True partnerships require investment from both parties. The best vendors commit senior resources to understanding your business strategy and proactively identify opportunities to deliver additional value. From your side, sharing strategic priorities and performance feedback helps vendors align their development roadmaps with your needs.

Create formal governance structures that bring together business and technical stakeholders from both organizations on a regular cadence. These standing committees provide forums for addressing issues, planning enhancements, and ensuring continuous alignment between the AI solution and evolving business requirements.

Joint Innovation Roadmaps

Develop shared technology roadmaps that align vendor development plans with your business strategy. These collaborative plans should identify upcoming features, integration opportunities, and potential use case expansions over 12-24 month horizons. The most effective roadmaps include both technical milestones and expected business outcomes, creating accountability for delivering real value rather than just new features.

Regular Performance Reviews

Establish a structured cadence for reviewing the AI solution's performance against business objectives. These reviews should go beyond basic SLA compliance to examine user adoption, workflow integration, and business impact metrics. The best review frameworks incorporate both quantitative measurements and qualitative feedback from users and stakeholders.

Use these reviews to identify both improvement opportunities and expansion possibilities. Vendors who actively participate in honest performance assessments and take ownership of improvement initiatives demonstrate the commitment to partnership that characterizes the most successful AI implementations.

Knowledge Transfer Protocols

Create formal processes for sharing expertise between your organization and the vendor. Effective knowledge transfer ensures your team develops the skills needed to maximize value from the AI solution while reducing dependency on vendor resources for routine operations. This might include technical training, process documentation, or embedding vendor experts within your teams during critical phases.

The most mature partnerships establish two-way knowledge exchange where vendors gain deeper understanding of your industry challenges while your teams build technical capabilities. This mutual development creates a virtuous cycle where both organizations continuously enhance their ability to deliver value through the AI solution.

Frequently Asked Questions

These common questions address the practical concerns organizations face when evaluating and selecting AI vendors. The answers reflect best practices gathered from hundreds of successful AI implementations across industries.

How long should an AI vendor evaluation process take?

A thorough AI vendor evaluation typically requires 6-12 weeks, depending on solution complexity and organizational requirements. The process includes requirements definition (1-2 weeks), vendor research and shortlisting (1-2 weeks), detailed evaluations including demos and technical reviews (2-4 weeks), and final selection with contract negotiation (2-4 weeks). Rushing this process significantly increases implementation risks and often leads to selecting vendors that don't align with long-term needs.
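The phase estimates above add up to the overall 6-12 week range, which a few lines of arithmetic confirm. The same structure can be reused to plan your own timeline by swapping in your estimates.

```python
# Phase durations in weeks as (minimum, maximum), taken from the estimates above.
PHASES = {
    "requirements definition": (1, 2),
    "vendor research and shortlisting": (1, 2),
    "detailed evaluations": (2, 4),
    "final selection and contract negotiation": (2, 4),
}


def total_range(phases: dict[str, tuple[int, int]]) -> tuple[int, int]:
    """Sum the minimum and maximum durations across all phases."""
    shortest = sum(low for low, _ in phases.values())
    longest = sum(high for _, high in phases.values())
    return shortest, longest


shortest, longest = total_range(PHASES)
print(f"Estimated evaluation length: {shortest}-{longest} weeks")
```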

What's the typical cost structure for AI solutions?

AI pricing models vary widely but typically include implementation costs (20-40% of first-year total), subscription or licensing fees (typically per user or per volume), and ongoing support and maintenance (15-25% of annual licensing costs). Many vendors offer consumption-based models where you pay based on API calls, processing volume, or active users. Enterprise agreements often include tiered pricing with volume discounts and minimum commitment requirements.
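These percentages can be turned into a rough first-year budget. The sketch below assumes the first-year total consists of only three components (licensing, support, and implementation), which is a simplification. If implementation is a share i of the first-year total T, and support is a share s of licensing L, then T = L + sL + iT, so T = L(1 + s) / (1 - i). The licensing figure and rates in the example are hypothetical.

```python
def first_year_budget(licensing: float, support_rate: float, implementation_share: float) -> dict[str, float]:
    """Estimate first-year costs from annual licensing, assuming three cost components.

    support_rate: support and maintenance as a fraction of annual licensing (e.g. 0.15-0.25).
    implementation_share: implementation as a fraction of the first-year total (e.g. 0.20-0.40).
    """
    if not 0 <= implementation_share < 1:
        raise ValueError("implementation_share must be in [0, 1)")
    support = licensing * support_rate
    # Solve T = licensing + support + implementation_share * T for T.
    total = (licensing + support) / (1 - implementation_share)
    implementation = total * implementation_share
    return {
        "licensing": licensing,
        "support": support,
        "implementation": implementation,
        "total": total,
    }


# Hypothetical example: $100,000 licensing, 20% support, implementation at 30% of the total.
budget = first_year_budget(licensing=100_000, support_rate=0.20, implementation_share=0.30)
for item, amount in budget.items():
    print(f"{item:>14}: ${amount:,.2f}")
```

Running the calculation at both ends of each range gives a budget envelope rather than a single number, which is usually more honest at the evaluation stage.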

Should I choose a specialized AI vendor or a full-service provider?

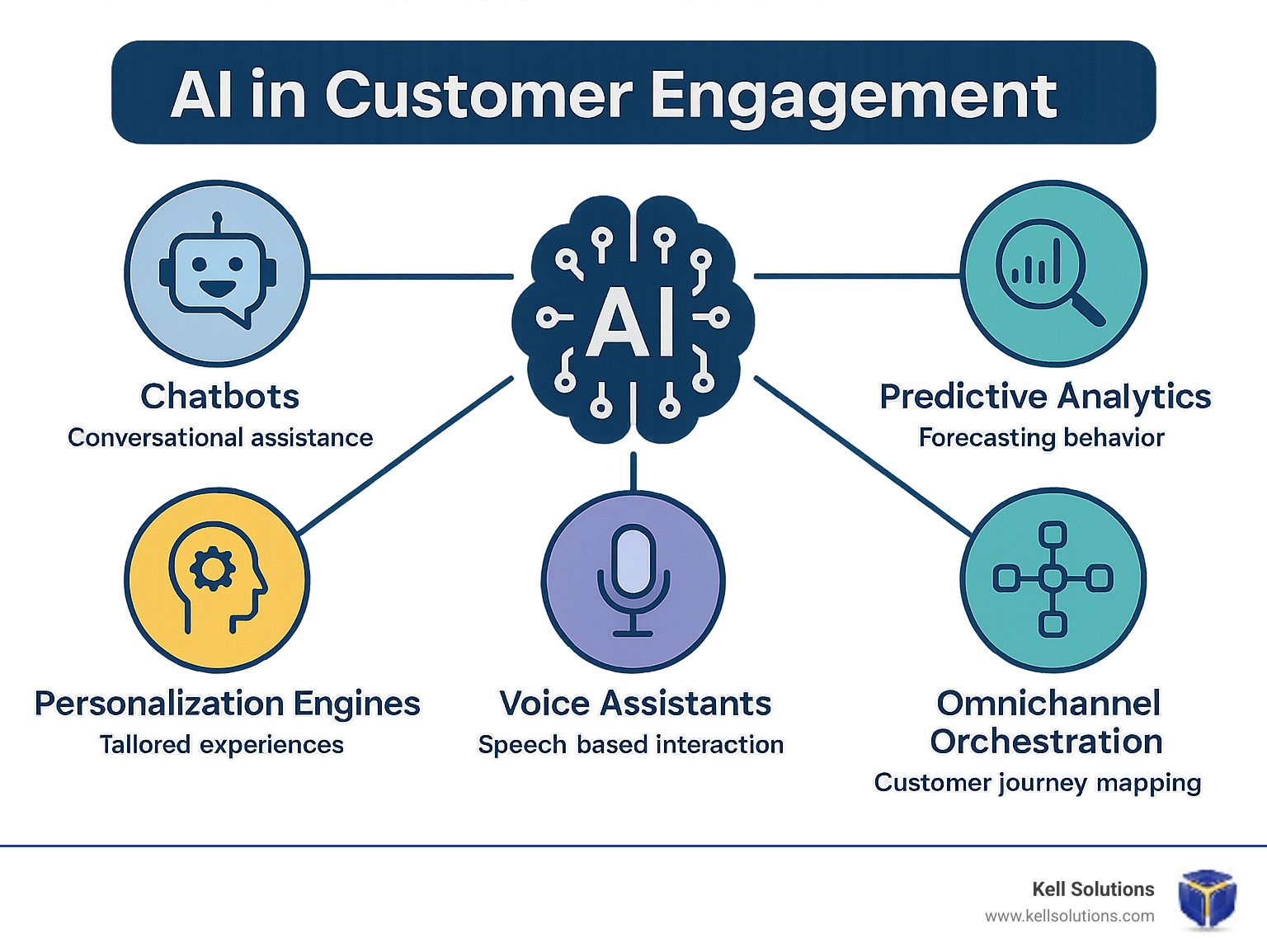

This decision depends on your specific use case, existing technology ecosystem, and internal capabilities. Specialized vendors typically offer deeper expertise in narrow domains and more advanced capabilities for specific functions like computer vision, natural language processing, or predictive analytics. These focused providers often deliver superior performance for their specialty but may create integration challenges with other systems.

Full-service providers offer broader functionality and typically provide better integration with existing enterprise systems. They're usually more appropriate for organizations seeking to implement AI across multiple functions with unified governance. The best approach often combines strategic partnerships with platform providers while leveraging specialized vendors for specific high-value use cases where their expertise delivers significant competitive advantage.

How do I measure ROI from my AI implementation?

Effective AI ROI measurement combines direct cost savings, productivity improvements, revenue impacts, and strategic value. Begin by establishing a clear pre-implementation baseline that documents current process costs, performance metrics, and resource requirements. Track both quantitative metrics (processing time, error rates, resource utilization) and qualitative outcomes (employee satisfaction, decision quality, customer experience).

The most comprehensive ROI frameworks look beyond immediate operational impacts to include strategic benefits like improved decision quality, new capabilities, and competitive differentiation. For maximum credibility, involve finance teams in ROI methodology development and validate assumptions with regular post-implementation measurements.
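For the quantitative part, a simple ROI calculation against the pre-implementation baseline is a reasonable starting point. The sketch below uses the common definition ROI = (benefits - costs) / costs; the benefit categories mirror the ones named above, and all dollar figures are hypothetical.

```python
def roi(total_benefit: float, total_cost: float) -> float:
    """Return ROI as a fraction: (benefit - cost) / cost."""
    if total_cost <= 0:
        raise ValueError("total_cost must be positive")
    return (total_benefit - total_cost) / total_cost


# Hypothetical first-year benefits measured against the pre-implementation baseline.
benefits = {
    "direct_cost_savings": 120_000,
    "productivity_improvements": 60_000,
    "revenue_impact": 40_000,
}
total_cost = 160_000  # hypothetical: licensing, implementation, support, internal effort

first_year_roi = roi(sum(benefits.values()), total_cost)
print(f"First-year ROI: {first_year_roi:.1%}")
```

Strategic benefits such as decision quality are hard to price and are better reported alongside this figure than forced into it.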

What certifications should I look for in an AI vendor?

Key certifications vary by industry and use case, but several provide valuable verification of vendor capabilities and practices. ISO 27001 and SOC 2 Type II certifications confirm robust information security practices, while HIPAA compliance is essential for healthcare applications. For specific AI capabilities, look for specialized certifications like Google Cloud ML expertise, Microsoft AI partner status, or industry-specific qualifications relevant to your sector.

Beyond formal certifications, evaluate vendors' commitment to responsible AI principles through their governance frameworks, bias testing methodologies, and transparency practices. Organizations like the Partnership on AI and the IEEE have published ethical AI guidance and standards that responsible vendors should acknowledge and incorporate into their development processes.
